Machine Learning pipeline

Introduction

Developing a machine learning pipeline generally requires a custom approach. There is no off-the-shelf, well-architected system that works for all use cases. On the contrary, each use case requires you to design a structure that satisfies your particular requirements. The process of developing, deploying, and continuously improving such pipelines is quite complex. There are three main axes that are subject to change: the code itself, the model, and the data. Their behavior can be hard to predict, test, explain, and improve.

There are some aspects that are important to know before you start building the system. For example:

- Will you be doing online or batch predictions?

- How often will you need to train the model?

- Will your pipeline need to be fully or partially automated?

Use Case

For this example, we will take the use case of making vehicle recommendations for our newsletter. The newsletter is sent out once per day and contains a set of recommended vehicles. A lot of new items are added to our inventory each day, so our system will perform better if we train the model more often. Since the newsletter is daily, we can generate the predictions in a single batch each day and have the data ready for the newsletter to use.

And of course, rather than perform these steps each day, it makes sense to automate this process and save our time for more exciting challenges. With this in mind, we can start answering some questions and determine which components we need and what the general structure of the pipeline will be.

Overview

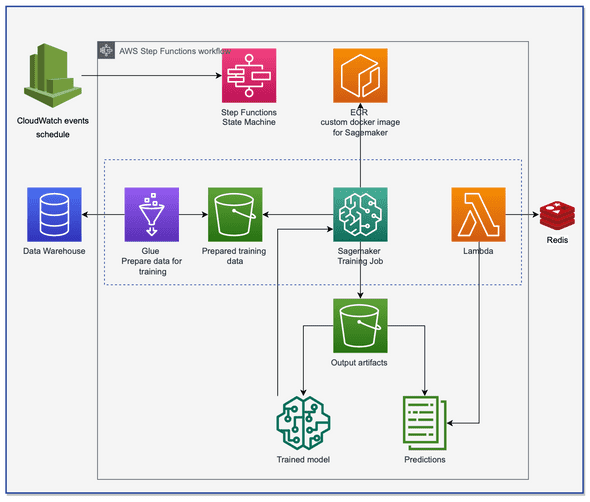

Even with a described use case and defined requirements, there are many possible designs. We use AWS extensively as our cloud provider, so we will use SageMaker to automate training our model and an S3 bucket to store temporary results. Data preprocessing is a separate step and will be implemented outside of SageMaker. The final consideration is how we will deliver the predictions to other teams.

Pipeline Components

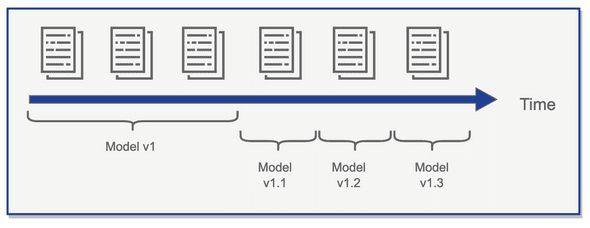

Let's describe each component in more detail, starting with the model. Ideally, we want the model to continue training on new data rather than train from scratch each time. This continual learning allows us to update the model with less data: instead of loading the last few months of data from the database, the job only needs to fetch data from the previous day. This incremental approach to training needs much less compute power. It also helps us avoid storing duplicate data, as each model update is trained using only fresh data.
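The "previous day only" window can be sketched as follows. This is a minimal illustration, assuming the training data carries a timestamp; the function name and the half-open interval convention are hypothetical and not taken from our pipeline code.

```python
from datetime import date, datetime, time, timedelta


def previous_day_window(run_date: date) -> tuple[datetime, datetime]:
    """Return the half-open interval [start, end) covering the day before run_date."""
    end = datetime.combine(run_date, time.min)  # midnight at the start of run_date
    start = end - timedelta(days=1)             # midnight one day earlier
    return start, end


start, end = previous_day_window(date(2022, 3, 15))
print(start.isoformat(), end.isoformat())
```

A half-open interval means that consecutive daily runs neither overlap nor leave gaps, which fits the goal of training each update on fresh data only.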

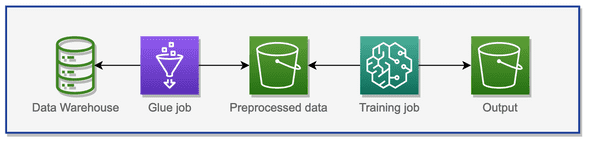

We are using SageMaker for training. We built the training job on a SageMaker PyTorch image, optimized for GPU and customized with our training configuration. During deployment of a new version, the image is pushed to ECR so that SageMaker can fetch the proper image and spin it up inside our SageMaker space. After training, SageMaker saves the resulting artifacts to an S3 bucket. SageMaker does not cover the ETL part, however, so we need to put the data loading and processing in place separately. For this purpose we have a dedicated job in Glue, a serverless data integration service for running ETL workloads without managing any underlying servers.

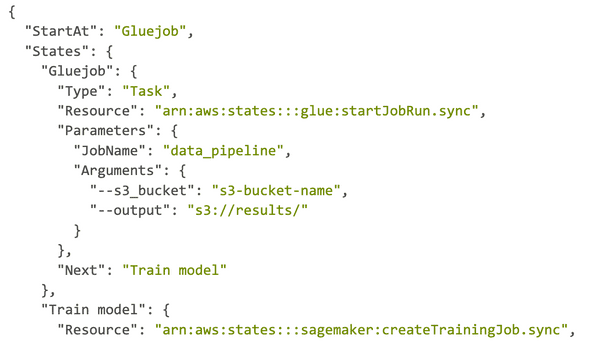

Individual AWS services are powerful tools by themselves, but chaining the outputs of one service to the inputs of another can be challenging without Step Functions. The Step Functions service (SF) coordinates the different pieces of the pipeline and controls which steps execute and when. A workflow, called a state machine, is defined in JSON. Its States field is an object containing the set of states (steps), and the definition also specifies the order in which they run.
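To make the JSON structure concrete, here is a rough skeleton of such a state machine, built as a Python dictionary and serialized to JSON. The state names, the Glue job name, and the Lambda function name are hypothetical, and the training parameters are left empty, so this is an outline of the shape rather than a deployable definition.

```python
import json

# Hypothetical resource names; replace them with the ones used in your account.
GLUE_JOB_NAME = "preprocess-vehicle-data"
LOAD_FUNCTION_NAME = "load-predictions-to-redis"

definition = {
    "Comment": "Daily recommendation pipeline (illustrative skeleton)",
    "StartAt": "Preprocess",
    "States": {
        "Preprocess": {
            "Type": "Task",
            # The .sync suffix makes Step Functions wait for the Glue job run to finish.
            "Resource": "arn:aws:states:::glue:startJobRun.sync",
            "Parameters": {"JobName": GLUE_JOB_NAME},
            "Next": "Train",
        },
        "Train": {
            "Type": "Task",
            "Resource": "arn:aws:states:::sagemaker:createTrainingJob.sync",
            "Parameters": {},  # training job settings are omitted in this skeleton
            "Next": "LoadPredictions",
        },
        "LoadPredictions": {
            "Type": "Task",
            "Resource": "arn:aws:states:::lambda:invoke",
            "Parameters": {"FunctionName": LOAD_FUNCTION_NAME},
            "End": True,
        },
    },
}


def execution_order(machine: dict) -> list[str]:
    """Follow the Next links from StartAt until a state marked End is reached."""
    order = []
    name = machine["StartAt"]
    while True:
        order.append(name)
        state = machine["States"][name]
        if state.get("End"):
            return order
        name = state["Next"]


print(execution_order(definition))
print(json.dumps(definition, indent=2))
```

The helper only handles a linear chain of states; branching states such as Choice are outside the scope of this sketch.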

By default, SF sends the output of a state as the input of the following state. It provides service integrations, which means it can call other AWS services as steps. During execution a context object is available, which contains information about the state machine and the execution. This gives workflows access to information about a specific execution. There is also an execution log, where you can find information about tasks and the time spent in each step. This can be helpful for debugging or tuning your execution.

This is how we brought all these services together to implement the pipeline. We extract data from the Data Warehouse in Glue jobs, where the preprocessing happens. The Glue job then stores intermediate results in S3 (as a least-effort solution). The training job starts as the next step after the Glue job finishes. Keep in mind that the training job name is a required field and must be unique within the region and account. In the Step Functions definition we also specify the S3 location for the output artifacts.
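One possible way to satisfy the uniqueness requirement is to let Step Functions generate the name at run time. The sketch below builds such a training step as a Python dictionary; the "vehicle-reco" prefix, the state names, and the bucket path are hypothetical, and the use of the States.Format and States.UUID intrinsic functions is our assumption about one workable approach, not necessarily what the pipeline described here does.

```python
import re
import uuid

# Pattern that SageMaker documents for training job names (at most 63 characters).
TRAINING_JOB_NAME = re.compile(r"[a-zA-Z0-9](-*[a-zA-Z0-9]){0,62}")


def is_valid_training_job_name(name: str) -> bool:
    """Check a candidate name locally before it ever reaches SageMaker."""
    return len(name) <= 63 and TRAINING_JOB_NAME.fullmatch(name) is not None


def training_task(output_s3_uri: str, next_state: str, prefix: str = "vehicle-reco") -> dict:
    """Build a Task state that starts a SageMaker training job and waits for it."""
    return {
        "Type": "Task",
        "Resource": "arn:aws:states:::sagemaker:createTrainingJob.sync",
        "Parameters": {
            # Evaluated by Step Functions at run time, so every execution gets a new name.
            "TrainingJobName.$": f"States.Format('{prefix}-{{}}', States.UUID())",
            # Where SageMaker writes the model artifacts.
            "OutputDataConfig": {"S3OutputPath": output_s3_uri},
            # AlgorithmSpecification, RoleArn, ResourceConfig and StoppingCondition
            # are also required by SageMaker; they are left out of this sketch.
        },
        "Next": next_state,
    }


task = training_task("s3://example-bucket/model-artifacts/", "LoadPredictions")
print(task["Parameters"]["TrainingJobName.$"])

# The same prefix plus a UUID, generated locally, passes the name check.
print(is_valid_training_job_name(f"vehicle-reco-{uuid.uuid4()}"))
```

Generating the name inside the state machine keeps the definition static while still producing a fresh name for every daily run.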

The next step is to set up a schedule. For this purpose we created a CloudWatch event that triggers the state machine and executes the pipeline.

The last step in the pipeline is to load the output data from S3 into a database that is accessible through an API. This task is handled by Lambda, another AWS service, which runs code in response to an incoming request or event. In our case, Lambda loads the predictions from S3 into Redis. Redis is an in-memory data structure store that not only works as a cache but can also function as a non-relational database. Writing to a Redis hash overwrites any specified fields that already exist in the hash and creates the hash if it is new, which works well for our use case. Finally, we created an endpoint using FastAPI that fetches data from Redis and provides it to the other teams.
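The overwrite-or-create behavior of hashes can be illustrated with a small sketch. To keep it runnable without a Redis server, it uses an in-memory stand-in that mimics only the hset(name, mapping=...) and hgetall(name) calls of a Redis client. The key layout (one hash per user, with a "vehicles" field) and all names are assumptions made for illustration.

```python
import json


class InMemoryHashStore:
    """Stand-in for a Redis client, so the sketch runs without a server.

    A real client may return bytes instead of str, depending on how it is configured.
    """

    def __init__(self):
        self._hashes = {}

    def hset(self, name, mapping):
        stored = self._hashes.setdefault(name, {})  # create the hash if it is new
        added = sum(1 for field in mapping if field not in stored)
        stored.update(mapping)                      # overwrite fields that already exist
        return added                                # number of newly created fields

    def hgetall(self, name):
        return dict(self._hashes.get(name, {}))


def store_predictions(client, predictions):
    """Write one hash per user; predictions maps a user id to a list of vehicle ids."""
    for user_id, vehicle_ids in predictions.items():
        client.hset(
            f"recommendations:{user_id}",
            mapping={"vehicles": json.dumps(vehicle_ids)},
        )


client = InMemoryHashStore()
store_predictions(client, {"user-1": [101, 102]})            # first daily run
store_predictions(client, {"user-1": [103], "user-2": [104]})  # next daily run
print(client.hgetall("recommendations:user-1"))
print(client.hgetall("recommendations:user-2"))
```

Because each daily run simply overwrites the previous value, the Lambda does not need to check whether a user already has recommendations stored.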

Creating and deploying all these services manually would be time-consuming and difficult. Instead we are using Terraform, an infrastructure-as-code tool that allows you to provision resources quickly and consistently and manage them throughout their lifecycle. By treating infrastructure as code we can avoid costly mistakes that can come from manual setup of services.

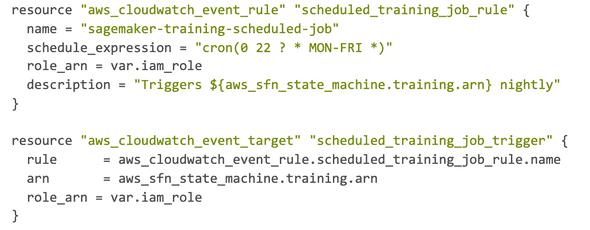

Here is an example of a Terraform configuration that manages the schedule in CloudWatch and triggers the Step Functions state machine.

It’s very straightforward: we need to specify an event target and an event rule, and give the rule a cron expression as its schedule.
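AWS schedule expressions use a six-field cron format that differs from the classic five-field Unix one. The helper below is a small Python sketch, not Terraform, that builds a once-per-day expression; the function name is hypothetical. In Terraform, the resulting string would be the value of the event rule's schedule_expression argument.

```python
def daily_schedule(hour_utc: int, minute: int = 0) -> str:
    """Build an AWS schedule expression that fires once per day at the given UTC time."""
    if not (0 <= hour_utc <= 23 and 0 <= minute <= 59):
        raise ValueError("hour_utc must be 0-23 and minute must be 0-59")
    # Fields: minutes, hours, day-of-month, month, day-of-week, year.
    # AWS requires "?" in either the day-of-month or the day-of-week field.
    return f"cron({minute} {hour_utc} * * ? *)"


print(daily_schedule(5))  # cron(0 5 * * ? *)
```

The schedule is evaluated in UTC, so the hour needs to be chosen with the newsletter's send time and time zone in mind.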

There are two steps that should be executed during deployment.

- terraform plan: shows the execution plan for the configuration before any infrastructure is created or changed.

- terraform apply: applies the changes.

With Terraform set up, we have all the parts in place for our system.

Conclusion

This is only one example of how this pipeline could have been implemented. When it comes to creating your own pipeline, you will have different choices to make. Here are some of the lessons we learnt to help you when making those choices.

- Step Functions did an excellent job of combining services. It provides a lot of flexibility for creating different scenarios and logic flows. Overall, it is a great choice for managing your pipeline.

- It is a best practice to limit access to AWS services. In AWS the rule is that anything not explicitly allowed is implicitly denied. Each service should get its own IAM policy to handle this. This can be tedious to set up, but it is worth it for the security and peace of mind it provides.

- On the same topic of security, you should avoid putting sensitive data into S3. If you are using a Jupyter notebook, be aware that it has access to the public internet by default. You should consider whether this is really needed for your use case.

- It is worth understanding the basic concepts of VPCs. We didn’t discuss them here, but they are an essential part of running many of the services. Some services also need to be in the same VPC to access each other.

I hope you find this helpful when you start to implement your own machine learning pipelines.
